High-level questions

In the emerging age of Artificial Intelligence (AI) and Machine Learning (ML), the MAHALO SESAR Exploratory Research project aims to answer simple yet profound questions: should we be developing automation that is conformal to the human, or automation that is transparent to the human? Do we need both? And are there trade-offs or interactions between the two concepts in terms of air traffic controller trust, acceptance, or performance?

Conformance

The apparent strategy match between human and machine solutions. This similarity is external, overt, and observable, and reflects the extent to which cause and effect can be observed.

Transparency

The extent to which aspects of the automation’s inner process underlying a solution can be observed and explained in human terms.

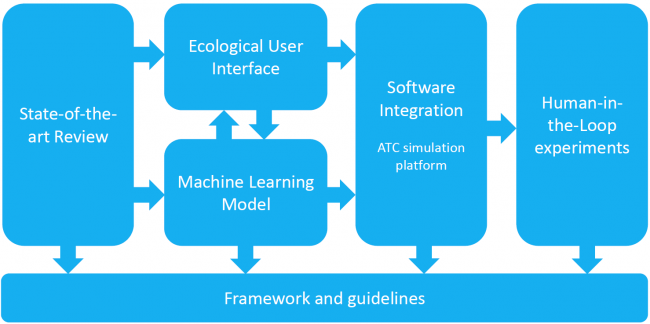

MAHALO Objectives

- Create and demonstrate an ML system, composed of layered deep learning and reinforcement learning models, that is trained on controller performance, control strategies, and eye-scan data, and which learns to solve ATC conflicts;
- Develop both a control model of ATC and an associated Ecological User Interface (E-UI) which, when operating in automated mode, augments the typical plan view display (PVD) with machine intent and decision selection rationale (to help foster transparency);
- Experimentally evaluate, using human-in-the-loop (HITL) simulations, the relative impact of conformance and transparency of advanced AI in terms of, e.g., controller trust, acceptance, workload, and human/machine performance, as well as how these effects are influenced by factors such as air traffic complexity or degraded mode operations; and
- Define a framework to guide the development of future AI systems, including guidance on the effects of conformance, transparency, complexity, and non-nominal (degraded mode) conditions.

MAHALO approach

In the MAHALO project, the following techniques will be developed and empirically explored to address conformance and transparency.

Conformance: Hybrid Supervised & Unsupervised Learning

The core task of air traffic controllers is conflict detection and resolution. For conflict detection, supervised learning techniques (for example, convolutional neural networks) will be explored to analyze controller data and develop both generic (“one-size-fits-all”) and individualized prediction models. For conflict resolution, (Deep) Reinforcement Learning will be used to train models capable of mimicking the human controller (high conformance) as well as proposing more optimized solutions (low conformance). The challenge here is to discover the “features” that capture the breadth of human decision-making in dynamic air traffic control tasks.
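To make the conformance dial concrete, here is a minimal sketch of how a reinforcement learning reward could trade off mimicking the controller against efficiency. It is not MAHALO's method: the `Resolution` fields, the three-attribute strategy match (which aircraft, which manoeuvre, which direction), the linear extra-track-miles efficiency score, and the `conformance_weight` parameter are all hypothetical assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resolution:
    """Hypothetical, simplified description of one conflict resolution."""
    aircraft: str          # callsign of the aircraft that is manoeuvred
    maneuver: str          # e.g. "heading", "altitude", "speed"
    direction: str         # e.g. "left", "right", "climb", "descend"
    extra_track_nm: float  # extra distance flown because of the manoeuvre


def strategy_match(machine: Resolution, human: Resolution) -> float:
    """Fraction of strategy attributes on which machine and controller agree (0..1)."""
    agreements = [
        machine.aircraft == human.aircraft,
        machine.maneuver == human.maneuver,
        machine.direction == human.direction,
    ]
    return sum(agreements) / len(agreements)


def efficiency(res: Resolution, max_extra_nm: float = 20.0) -> float:
    """1.0 for no extra track miles, falling linearly to 0.0 at max_extra_nm (assumed scale)."""
    return max(0.0, 1.0 - res.extra_track_nm / max_extra_nm)


def shaped_reward(machine: Resolution, human: Resolution, conformance_weight: float) -> float:
    """Blend imitation of the controller with efficiency.

    conformance_weight = 1.0 rewards only matching the controller (high conformance);
    conformance_weight = 0.0 rewards only efficiency (potentially low conformance).
    """
    if not 0.0 <= conformance_weight <= 1.0:
        raise ValueError("conformance_weight must lie in [0, 1]")
    return (conformance_weight * strategy_match(machine, human)
            + (1.0 - conformance_weight) * efficiency(machine))


if __name__ == "__main__":
    # Hypothetical scenario: the controller turned AC1 right, costing 8 NM.
    controller = Resolution("AC1", "heading", "right", extra_track_nm=8.0)
    mimic = Resolution("AC1", "heading", "right", extra_track_nm=8.0)
    alternative = Resolution("AC2", "altitude", "climb", extra_track_nm=0.0)
    for w in (1.0, 0.5, 0.0):
        print(w, shaped_reward(mimic, controller, w), shaped_reward(alternative, controller, w))
```

With the weight at 1.0 the resolution that copies the controller scores highest; at 0.0 the more efficient alternative wins. Sweeping the weight between these extremes is one hedged way to produce agents at different, controllable levels of conformance.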

Transparency: Ecological Interface Design

Any form of automation, whether based on AI techniques or on a set of standardized rules, procedures, and logic, tends to be designed as a “black box.” In MAHALO, the outputs of the machine learning models will be made transparent and explainable by adopting the Ecological Interface Design framework. Ecological interfaces typically portray the physical and intentional system boundaries that define the playground in which both human and automated agents can act safely. The interface will thus serve as a common ground, or “shared mental model,” between human and automated agents. The design challenge here is to balance interface complexity (e.g., clutter) against usability.
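One way to make a physical boundary concrete is to compute which ownship headings would lead to a loss of separation with another aircraft, so that the display can show the remaining safe headings to both controller and automation. The sketch below is an illustrative assumption, not the MAHALO interface: it assumes a flat 2D plane with positions in NM (x east, y north), straight-line constant-velocity motion, a single intruder, a 5 NM separation minimum, and a 15-minute look-ahead. All function names and defaults are hypothetical.

```python
import math


def velocity(heading_deg, speed_kt):
    """Convert heading (degrees clockwise from north) and speed to (east, north) components in kt."""
    h = math.radians(heading_deg)
    return speed_kt * math.sin(h), speed_kt * math.cos(h)


def cpa_distance(rel_pos, rel_vel, lookahead_h):
    """Distance (NM) at the closest point of approach within [0, lookahead_h] hours,
    assuming both aircraft fly straight at constant velocity."""
    px, py = rel_pos
    vx, vy = rel_vel
    v2 = vx * vx + vy * vy
    t = 0.0 if v2 == 0.0 else -(px * vx + py * vy) / v2
    t = min(max(t, 0.0), lookahead_h)  # only the future, up to the look-ahead horizon
    return math.hypot(px + vx * t, py + vy * t)


def safe_headings(own_pos, own_speed_kt, intruder_pos, intruder_vel,
                  separation_nm=5.0, lookahead_h=0.25, step_deg=10):
    """Return candidate ownship headings that keep at least separation_nm from the intruder."""
    rel_pos = (intruder_pos[0] - own_pos[0], intruder_pos[1] - own_pos[1])
    safe = []
    for heading in range(0, 360, step_deg):
        ovx, ovy = velocity(heading, own_speed_kt)
        # Intruder velocity relative to the ownship flying this candidate heading.
        rel_vel = (intruder_vel[0] - ovx, intruder_vel[1] - ovy)
        if cpa_distance(rel_pos, rel_vel, lookahead_h) >= separation_nm:
            safe.append(heading)
    return safe


if __name__ == "__main__":
    # Hypothetical head-on geometry: intruder 40 NM north, flying south at 400 kt.
    print(safe_headings((0.0, 0.0), 400.0, (0.0, 40.0), (0.0, -400.0)))
```

Headings missing from the returned list would be drawn as a forbidden band around the aircraft symbol, so the boundary of safe action is visible regardless of whether a human or the automation proposes the resolution.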

Experimentation

Issues in human-automation interaction are among the most difficult problems to solve. There is neither an easy-to-follow recipe nor a mathematical formula that captures and optimizes the dynamics of interaction. In MAHALO, a series of real-time, human-in-the-loop experiments will provide empirical insights into the impact of conformance and transparency on air traffic controllers’ trust, acceptance, system understanding, and performance. By purposefully manipulating levels of conformance and transparency, MAHALO aims to answer the high-level research questions.

Project overview / timeline